There are rules people must agree to before joining Unloved, a private discussion group on Discord, the messaging service popular among players of video games. One rule: “Do not respect women.”

For those inside, Unloved serves as a forum where about 150 people embrace a misogynistic subculture in which the members call themselves “incels,” a term that describes those who identify as involuntarily celibate. They share some harmless memes but also joke about school shootings and debate the attractiveness of women of different races. Users in the group — known as a server on Discord — can enter smaller rooms for voice or text chats. The name for one of the rooms refers to rape.

In the vast and growing world of gaming, views like these have become easy to come across, both within some games themselves and on social media services and other sites, like Discord and Steam, used by many gamers.

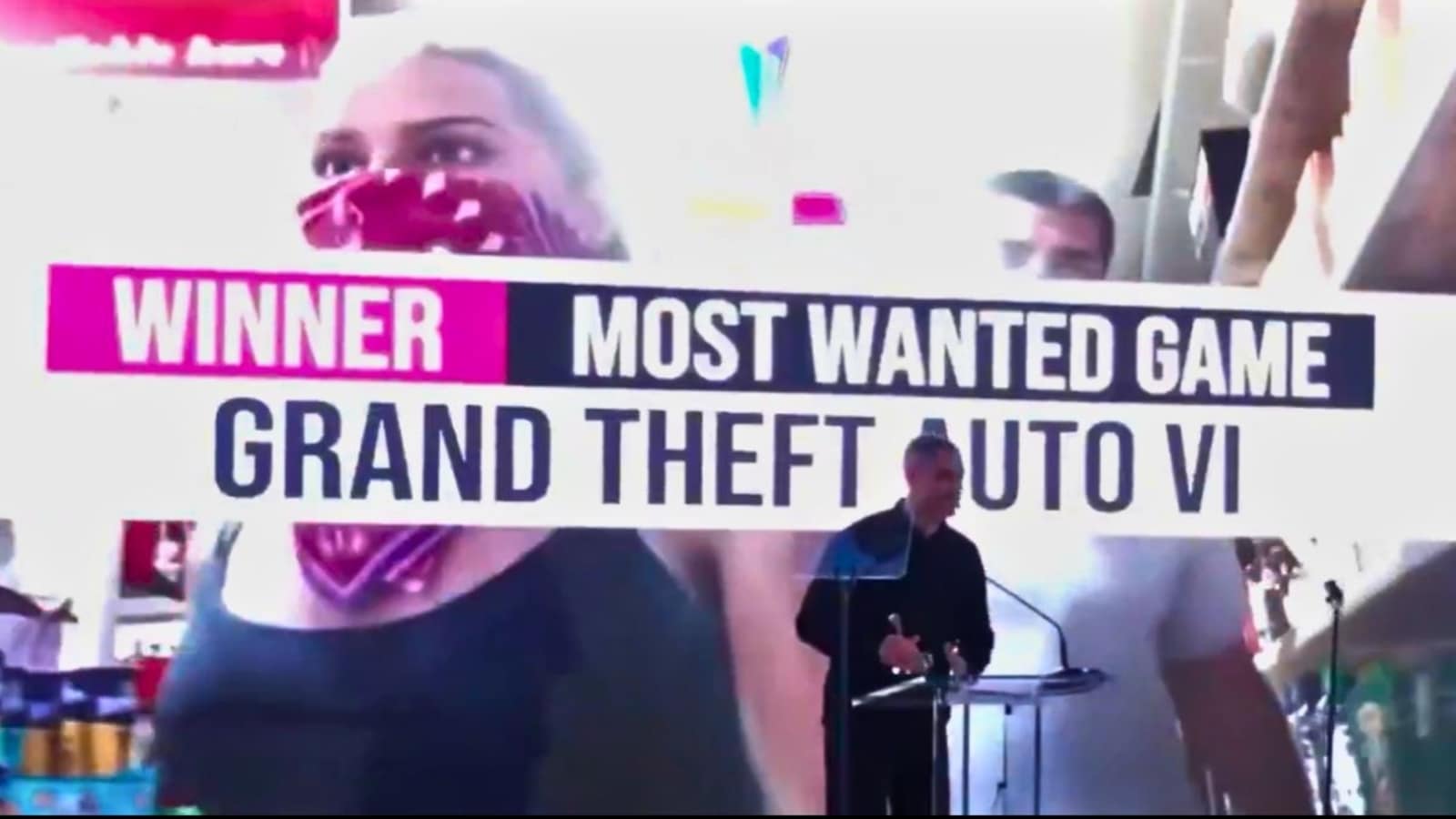

The leak of a trove of classified Pentagon documents on Discord by an Air National Guardsman who harbored extremist views prompted renewed attention to the fringes of the $184 billion gaming industry and how discussions in its online communities can manifest themselves in the physical world.

A report, released on Thursday by the NYU Stern Center for Business and Human Rights, underscored how deeply rooted misogyny, racism and other extreme ideologies have become in some video game chat rooms, and offered insight into why people playing video games or socializing online seem to be particularly susceptible to such viewpoints.

The people spreading hate speech or extreme views have a far-reaching effect, the study argued, even though they are far from the majority of users and occupy only pockets of some of these services. These users have built virtual communities to spread their noxious views and to recruit impressionable young people online with hateful and sometimes violent content, with comparatively little of the public pressure that social media giants like Facebook and Twitter have faced.

The center’s researchers conducted a survey in five of the world’s major gaming markets — the United States, Britain, South Korea, France and Germany — and found that 51 percent of those who played online reported encountering extremist statements in games that featured multiple players during the past year.

“It may well be a small number of actors, but they’re very influential and can have huge impacts on the gamer culture and the experiences of people in real world events,” the report’s author, Mariana Olaizola Rosenblat, said.

Historically male-dominated, the video game world has long grappled with problematic behavior, such as GamerGate, a long-running harassment campaign against women in the industry in 2014 and 2015. In recent years, video game companies have promised to improve their workplace cultures and hiring processes.

Gaming platforms and adjacent social media sites are particularly vulnerable to extremist groups’ outreach because of the many impressionable young people who play games, as well as the relative lack of moderation on some sites, the report said.

Some of these bad actors speak directly to other people in multiplayer games, like Call of Duty, Minecraft and Roblox, using in-game chat or voice functions. Other times, they turn to social media platforms, like Discord, that first rose to prominence among gamers and have since gained wider appeal.

Among those surveyed for the report, between 15 and 20 percent of respondents under the age of 18 said they had seen statements supporting the idea that “the white race is superior to other races,” that “a particular race or ethnicity should be expelled or eliminated” or that “women are inferior.”

In Roblox, a game that allows players to create virtual worlds, players have re-enacted Nazi concentration camps and the massive re-education camps that the Chinese Communist government has built in Xinjiang, a mostly Muslim region, the report said.

In the game World of Warcraft, online groups — called guilds — have also advertised neo-Nazi affiliations. On Steam, an online games store that also has discussion forums, one user named themselves after Heinrich Himmler, the chief architect of the Holocaust; another used Gas.Th3.J3ws. The report uncovered similar user names connected to players in Call of Duty.

Disboard, a volunteer-run site that shows a list of Discord servers, includes some that openly advertise extremist views. Some are public, while others are private and invitation only.

One, called Dissident Lounge 2, tags itself as Christian, nationalist and “based,” slang that has come to mean not caring what other people think. Its profile image is Pepe the Frog, a cartoon character that has been appropriated by white supremacists.

“Our race is being replaced and shunned by the media, our schools and media are turning people into degenerates,” the group’s invitation for others to join reads.

Jeff Haynes, a gaming expert who until recently worked at Common Sense Media, which monitors entertainment online for families, said, “Some of the tools that are used to connect and foster community, foster creativity, foster interaction can also be used to radicalize, to manipulate, to broadcast the same kind of egregious language and theories and tactics to other people.”

Gaming companies say they have cracked down on hateful content, establishing prohibitions of extremist material and recording or saving audio from in-game conversations to be used in potential investigations. Some, like Discord, Twitch, Roblox and Activision Blizzard — the maker of Call of Duty — have put in place automatic detection systems to scan for and delete prohibited content before it can be posted. In recent years, Activision has banned 500,000 accounts on Call of Duty for violating its code of conduct.

Discord said in a statement that it was “a place where everyone can find belonging, and any behavior that goes counter to that is against our mission.” The company said it barred users and shut down servers if they exhibited hatred or violent extremism.

Will Nevius, a Roblox spokesman, said in a statement, “We recognize that extremist groups are turning to a variety of tactics in an attempt to circumvent the rules on all platforms, and we are determined to stay one step ahead of them.”

Valve, the company that runs Steam, did not respond to a request for comment.

Experts like Mr. Haynes say the fast, real-time nature of games creates enormous challenges to policing unlawful or inappropriate behavior. Nefarious actors have also been adept at evading technological obstacles as quickly as they can be erected.

In any case, with three billion people playing worldwide, the task of monitoring what is happening at any given moment is virtually impossible.

“In upcoming years, there will be more people gaming than there would be people available to moderate the gaming sessions,” Mr. Haynes said. “So in many ways, this is literally trying to put your fingers in a dike that is ridden by holes like a massive amount of Swiss cheese.”